
Lecture 19

- neural networks are for learning better representations

Review: Transformer (LLM) General Structure

- an LLM has many encoders

- each encoder develops a better representation than the one before

- does so by having ensemble of NNs evaluate information, then combining info from all of them

Languages have two important kinds of structure:

- hierarchical/tree structure relating words

- word order, i.e., which word comes first

- transformers separate these two kinds of structure

- GPT LLMs predict each word from all previous words

in order to do so, needs to know:

- hierarchical relation between each word and each other word

- position of each word

Sentence Input to the LLM

- run one-hot encoding of each word through embedding NN to get embedding vectors

- calculate positional encoding for each word

- word vector = embedding + positional encoding

- for the LLM to "understand" the sentence, the word vectors pass through a sequence of encoders so that better representations are gradually found
- 
- the representations capture the relation between each pair of words, regardless of their positions

- this is called syntactic distance
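
The input steps above (one-hot, embedding, add positional encoding) can be sketched roughly as follows. This is a minimal illustration, not how any particular LLM is implemented: the three-word vocabulary, the random embedding matrix, and the placeholder positional values are all assumptions made up for the example. In a real model the embedding matrix is learned during training.

```python
import random

def one_hot(index, vocab_size):
    """Return a one-hot list with 1.0 at `index` and 0.0 elsewhere."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec

def embed(one_hot_vec, embedding_matrix):
    """Multiply a one-hot vector by a (vocab_size x d_model) matrix.
    With a one-hot input this simply selects one row of the matrix."""
    d_model = len(embedding_matrix[0])
    return [
        sum(x * row[j] for x, row in zip(one_hot_vec, embedding_matrix))
        for j in range(d_model)
    ]

def input_vectors(token_ids, embedding_matrix, positional_encodings):
    """word vector = embedding + positional encoding, for each position."""
    vocab_size = len(embedding_matrix)
    vectors = []
    for position, token_id in enumerate(token_ids):
        e = embed(one_hot(token_id, vocab_size), embedding_matrix)
        p = positional_encodings[position]
        vectors.append([ei + pi for ei, pi in zip(e, p)])
    return vectors

# Toy setup (all values are hypothetical, for illustration only).
vocab = {"the": 0, "dog": 1, "barks": 2}
d_model = 4
random.seed(0)
embedding_matrix = [
    [random.uniform(-1, 1) for _ in range(d_model)] for _ in vocab
]
# Placeholder positional values; real encodings are computed differently.
positional_encodings = [[0.1 * pos] * d_model for pos in range(3)]

token_ids = [vocab[w] for w in ["the", "dog", "barks"]]
vectors = input_vectors(token_ids, embedding_matrix, positional_encodings)
```

Each entry of `vectors` is what gets passed to the first encoder: the same word at a different position yields a different vector, because only the positional part changes.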

Syntactic Distance

- tree siblings are closer than tree cousins, which are closer than second cousins, etc.

To find cognitive/syntactic distance:

- take any two words

- find the lowest node in the tree that dominates them both

- measure the number of nodes in the route between the two objects (including lowest node dominating both of them)

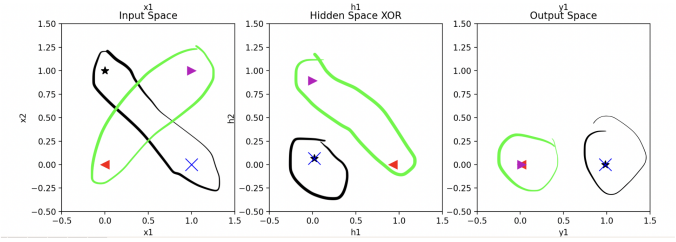
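
The procedure above can be sketched in code. This is one possible reading of the counting rule, stated as an assumption: the distance is the number of nodes on the route between the two words, counting the lowest dominating node but not the two words themselves. The example tree (for "the dog chased the cat") and its node labels are hypothetical.

```python
def ancestors(node, parent):
    """Return the chain from `node` up to the root, starting with `node`."""
    chain = [node]
    while node in parent:
        node = parent[node]
        chain.append(node)
    return chain

def syntactic_distance(a, b, parent):
    """Count the nodes on the route between leaves a and b,
    including the lowest node that dominates both."""
    if a == b:
        return 0
    up_a = ancestors(a, parent)
    up_b = ancestors(b, parent)
    for steps_a, node in enumerate(up_a):
        if node in up_b:
            # `node` is the lowest node dominating both words.
            steps_b = up_b.index(node)
            # Intermediate nodes below it on each side, plus the node itself.
            return (steps_a - 1) + (steps_b - 1) + 1
    raise ValueError("the two words are not in the same tree")

# Hypothetical tree, stored as child -> parent.
parent = {
    "the_1": "NP_subj", "dog": "NP_subj",
    "NP_subj": "S", "VP": "S",
    "chased": "VP", "NP_obj": "VP",
    "the_2": "NP_obj", "cat": "NP_obj",
}

sibling_distance = syntactic_distance("the_1", "dog", parent)   # siblings
verb_object_distance = syntactic_distance("chased", "cat", parent)
subject_object_distance = syntactic_distance("dog", "cat", parent)
```

Under this counting, the siblings "the" and "dog" come out closest, and the subject and object nouns come out farthest apart, matching the siblings-before-cousins ordering.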

What GPT accomplishes (approx)

- now, imagine that each word has a vector associated with it

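- between any two vectors, we can measure the inner product (IP)
- 
- IP measures how close two vectors are: a larger IP means the vectors are more similar
- 
- if we can move vectors such that the IPs increasingly reflect hierarchical distances (higher IP for syntactically closer words), the vectors represent both words AND syntax trees through their interdistances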
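
As a small illustration of the idea, the sketch below computes inner products between toy word vectors. The vector values are invented for the example (they do not come from any model), and were chosen so that the two words that would be tree siblings ("the", "dog") end up with the largest IP.

```python
def inner_product(u, v):
    """Sum of elementwise products of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))

# Hypothetical 3-dimensional word vectors, for illustration only.
word_vectors = {
    "the": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.3, 0.1],
    "cat": [0.1, 0.2, 0.9],
}

ip_the_dog = inner_product(word_vectors["the"], word_vectors["dog"])
ip_dog_cat = inner_product(word_vectors["dog"], word_vectors["cat"])
ip_the_cat = inner_product(word_vectors["the"], word_vectors["cat"])

# Rank word pairs from most to least similar by IP.
pairs = {
    ("the", "dog"): ip_the_dog,
    ("dog", "cat"): ip_dog_cat,
    ("the", "cat"): ip_the_cat,
}
ranked = sorted(pairs, key=pairs.get, reverse=True)
```

Here `ranked` puts `("the", "dog")` first. In the picture sketched in these notes, training would nudge the vectors until this kind of ranking lines up with the tree distances between the words.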


Positional Encodings

- each word is represented by a wave

- the number of maxima in the wave represents the word's position in the word order
- 
- introduced by Vaswani et al. (2017), the paper at the start of LLMs
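
For reference, the sinusoidal encoding in Vaswani et al. (2017) uses sine on even dimensions and cosine on odd dimensions, with wavelengths that grow across dimensions. The sketch below follows that formula; the function name and the small `d_model` are choices made for this example only. How exactly it maps onto the "one wave per word" picture in these notes is an interpretation, not something stated in the lecture.

```python
import math

def positional_encoding(position, d_model, base=10000.0):
    """Sinusoidal encoding for one position:
    PE(pos, 2i)   = sin(pos / base^(2i / d_model))
    PE(pos, 2i+1) = cos(pos / base^(2i / d_model))
    """
    pe = []
    for j in range(d_model):
        i = j // 2  # dimensions 2i and 2i+1 share one frequency
        angle = position / (base ** (2 * i / d_model))
        pe.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
    return pe

# Encodings for the first three positions of a sentence.
encodings = [positional_encoding(pos, d_model=4) for pos in range(3)]
```

Every position gets a distinct vector, and the vector depends only on the position, not on which word sits there, which is what lets the model keep word identity and word order as separate pieces of information.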
